Introduction

In this article, we look at map data with an eye toward operational feasibility. If GIS map preparation is sloppy, you can end up with duplicates, overlapping polygons and/or gaps in the coverage. Moreover, you can make the situation even worse when you intersect these layers in GIS or try to group stands together using analysis area control.

Intersection Considerations

Best practice is for each GIS layer to represent a single type of information, such as stand boundaries, soil series or harvest units. A simple intersection of these layers can result in a very large number of new polygons due to near-coincident lines. This can be a problem if the available yield tables only represent a portion of those new combinations of attributes. So how do you avoid this?

Coverages are hierarchical in nature. Property boundaries and surveyed features such as rights-of-way should be considered inviolate and trump any other coverage. A clipping function should be applied to ensure that only stands within the property boundary are counted.

Stand boundaries have an inherent fuzziness to them such that sliver removal procedures should not cause too much change overall. For soil series, I recommend that instead of a topological polygon intersection, clients perform a spatial overlay, assigning the stand polygons the majority soil type within each boundary. That way, there is only one soil type per stand, with consistency in site quality and productivity. In other words, only one yield table per stand.
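As a rough illustration of the majority-soil idea, here is a minimal Python sketch. It assumes you have already overlaid soils on stands and exported the overlap pieces as (stand, soil, acres) records. The stand IDs, soil names and acreages are made up, and "majority" is interpreted as the soil with the largest share of the stand's area.

```python
from collections import defaultdict


def majority_soil(pieces):
    """Return {stand_id: soil}, picking the soil with the largest overlap area per stand."""
    area_by_stand = defaultdict(lambda: defaultdict(float))
    for stand_id, soil, acres in pieces:
        area_by_stand[stand_id][soil] += acres
    # One soil per stand: the one covering the most acres (ties go to the first seen).
    return {stand: max(soils, key=soils.get) for stand, soils in area_by_stand.items()}


# Hypothetical overlay pieces: (stand id, soil series, overlap acres)
pieces = [
    ("S1", "Cecil", 30.0),
    ("S1", "Pacolet", 12.5),
    ("S1", "Cecil", 4.0),
    ("S2", "Pacolet", 8.0),
    ("S2", "Appling", 9.5),
]

assignment = majority_soil(pieces)
print(assignment)
```

The stand polygons themselves are never split, so each stand keeps a single soil attribute and therefore a single yield table.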

Finally, consider what I call my "1%/1000 acre rule": if a development type is less than 1% of the total land base or less than 1000 acres (whichever is smaller), it is too small for consideration in the model. For example, if you are modeling a property of 500,000 acres, does it make sense to spend resources on yield table generation for 150 acres (0.03%)? Even if it contains the most valuable timber, its contribution to the objective function is going to be negligible. For small projects, the thresholds may not work as-is, but the concept still holds. You can, of course, choose different thresholds, but you shouldn't just accept every possible permutation of thematic attributes that is presented to you.
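The rule is simple enough to express as a small screening function. The sketch below is only an illustration: the function name and default arguments are mine, and you would substitute your own thresholds.

```python
def too_small(devtype_acres, total_acres, pct=0.01, max_acres=1000.0):
    """Apply the "1%/1000 acre rule": flag a development type that falls below
    the smaller of pct * total land base and max_acres."""
    cutoff = min(pct * total_acres, max_acres)
    return devtype_acres < cutoff


# The example from the text: 150 acres on a 500,000-acre property.
print(too_small(150, 500_000))    # cutoff is min(5,000, 1,000) = 1,000 acres
print(too_small(1_500, 500_000))  # above the cutoff, so worth modeling

# On a hypothetical 20,000-acre property the cutoff drops to 200 acres.
print(too_small(150, 20_000))
print(too_small(250, 20_000))
```

Running a check like this over the area summary of your intersected layers gives you a list of development types to merge into their nearest neighbors before you build yield tables.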

Harvest Blocking Considerations

For harvest unit boundaries, focus on retaining stand boundaries as much as possible when you do layout. An arbitrary straight line between two similar stands often will not cause problems. However, if the stands are very different in age, the solver may find it difficult to schedule the resulting harvest unit due to operability criteria. Why would this happen?

If you are using Woodstock's analysis area control feature (AAControl), you need to be aware that a harvest unit or block can only be assigned a single action (or prescription, if using Regimes). While the scheduling is at the block level, operability remains at the DevType level. That means if you combine stands where the operability conditions for each member polygon do not overlap (due to age or activity differences), the entire unit is never feasible to harvest. Some examples, you ask? How about when you include an area of reforestation in a unit that should be harvested? Or you combine two stands for commercial thinning where one portion must be fertilized and the other does not?

A Software Fix for Sloppy Work

When you set up analysis area units (AAUnits) in Woodstock, you want the model to choose a single activity for the entire unit, preferably all in one planning period. Early on when clients tried AAControl, a flurry of tech-support calls came in complaining that units were never being cut.

The culprit was sloppy layout, with incompatible stands grouped together. The solution was a software fix where units can be cut without 100% of the area treated. With this new approach, the units were scheduled but some of the areas within those units were not harvested. Ultimately, Remsoft set the default threshold for operability of AAUnits at 20% of the total area. Users can change that with higher or lower limits up to 100%.

Frankly, I think this default is way too low. Sure, the sloppy units don’t create tech support calls anymore, but if you only harvest a minor fraction of a unit, what is the point of using AAControl? Worse, I don’t recall reading anything about what happens to the stands that were not scheduled. So I decided to investigate this operational feasibility issue for myself.

How to Control Operability of AAUnits

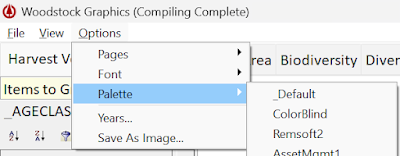

You activate AAControl and specify the threshold for operability of harvest units in Woodstock in the Control Section.

*AACONTROL ON ; minimum operable area is 20% of the unit area
*AACONTROL ON aCC ; schedule only the aCC action using AAControl
*AACONTROL ON 99% ; minimum operable area is 99% of the unit area

Remember, the default operability threshold is 20%. If you specify a threshold of 100%, all member polygons must be operable at the same time for Woodstock to create a decision variable for the unit. However, if some part of the unit is always inoperable, the unit will never be harvested. Conversely, if you accept the default, at least 20% of the area of a unit must be operable in order for Woodstock to generate a harvest choice for it. But that choice only includes the operable stands! The inoperable ones are omitted if that choice is part of the optimal solution. Look at the schedule section for omitted DevTypes:

0.017831 aCC 4 _Existing _RX(rxV) |Aaunit:U343| ;B982.2 100.0%
31.863753 aCC 4 _Existing _RX(rxV) |Aaunit:U343| ;B982.6 100.0%
0.034987 aCC 4 _Existing _RX(rxV) |Aaunit:U343| ;B982.3 100.0%
68.775675 aCC 4 _Existing _RX(rxV) |Aaunit:U343| ;B982.4 100.0%
1.688875 aCC 4 _Existing _RX(rxV) |Aaunit:U343| ;B982.7 100.0%
5.554339 aCC 4 _Existing _RX(rxV) |Aaunit:U343| ;B982.5 100.0%
0 aCC 4 _Existing _RX(rxV) |Aaunit:U343| ;B982.1 100.0% Area = 0.77953 not operable in 4

The last record shows that 0.77953 acres of unit U343 is not operable in period 4, so it was not harvested, even though 100% of it was scheduled as part of unit U343!

What Happens to the Inoperable Parts?

The example above comes from a solution where I set the AAControl threshold to 99%. Since the unit is larger than 100 acres and the inoperable portion is less than 1 acre, clearly the unit meets the threshold for operability.
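To check that arithmetic, the following Python sketch adds up the acres from the U343 schedule records and compares the operable share with the threshold. It assumes, as described above, that the threshold is compared against operable area as a fraction of total unit area. The function names are mine, not Woodstock's.

```python
# Acres harvested per record for unit U343 in period 4, from the schedule listing.
harvested = [0.017831, 31.863753, 0.034987, 68.775675, 1.688875, 5.554339]
# Acres flagged "not operable in 4" on the last record.
not_operable = 0.77953


def operable_share(operable_acres, inoperable_acres):
    """Fraction of the unit's total area that is operable."""
    return operable_acres / (operable_acres + inoperable_acres)


def meets_threshold(share, threshold):
    """True if the operable share reaches the AAControl threshold (both as fractions)."""
    return share >= threshold


share = operable_share(sum(harvested), not_operable)
print(f"unit area: {sum(harvested) + not_operable:.2f} ac, operable share: {share:.2%}")
print("meets 99% threshold: ", meets_threshold(share, 0.99))
print("meets 100% threshold:", meets_threshold(share, 1.00))
```

The unit totals a little under 109 acres and roughly 99.3% of it is operable, so it passes at 99% but would never be scheduled at 100%.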

The inoperable areas are part of a larger development type (DevType) that is split between two harvest units (U343 and U338). If we scan for the inoperable DevType acres later in the harvest schedule, we only find the acres in unit U338, not the residual from U343. The residual part of U343 never appears in the schedule section again. Note that this is not due to a constraint where only harvest units count. Also, I cannot say for sure that this happens in every model, but I have seen this behavior many times over the years.

If the number of acres that remain unscheduled is small, in terms of operational feasibility, it may not be a source of concern. For example, I’ve seen lots of spatial data with small fragments of older stands scattered among large areas of recent clearcuts, so it isn’t uncommon. However, at some point you do need to harvest those areas. A model that continues to produce unharvested fragments may be problematic from an operational feasibility standpoint.

How to Avoid “Forgotten Areas”

We just saw that including stands of very different ages can be problematic for scheduling final harvests. Clearly, it is best to group similar stands together in harvest units. If that is not possible, at least grow them all long enough that the youngest and oldest cohorts are both within the operability window. It may make perfect sense to hold an old stand fragment well beyond its normal rotation for operational feasibility. To make that happen, the old stand must be a significant part of the harvest unit, and you must also set a high operability threshold.

However, similar issues can arise with commercial thinning even if the ages are similar. For example, suppose that you generated thinned yield tables with defined age (14-17) and volume removal (> 50 tons/ac) requirements. The first stand is currently 15 years old but doesn’t reach the minimum volume bar until age 17. The second stand is currently 16 years old and is OK to thin immediately. But you created a unit that includes some of both stands. Now, when scheduling a thinning, there is no operability window to treat both stands simultaneously: by the time the first stand is operable in 2 years (age 17), the other stand will be too old (age 18).

17 109.37 rCT 2 _Existing _RX(rxTH) |Aaunit:AA099| ;B82.3 100.0%
16 0 rCT 2 _Existing _RX(rxTH) |Aaunit:AA099| ;B82.2 100.0% Area = 36.8500 not operable in 2
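The thinning example boils down to intersecting two operability windows. Here is a minimal Python sketch of that check, working in years from now rather than Woodstock planning periods. The function and its arguments are hypothetical and only encode the age and volume conditions described above.

```python
def operable_years(current_age, min_age, max_age, volume_ok_age, horizon=10):
    """Years from now in which a stand meets both the age window and the volume bar.

    volume_ok_age is the first age at which the stand yields the minimum removal.
    """
    first_age = max(min_age, volume_ok_age)
    return {
        year
        for year in range(horizon + 1)
        if first_age <= current_age + year <= max_age
    }


# First stand: age 15 now, reaches 50 tons/ac only at age 17.
stand_a = operable_years(current_age=15, min_age=14, max_age=17, volume_ok_age=17)
# Second stand: age 16 now, already meets the volume bar.
stand_b = operable_years(current_age=16, min_age=14, max_age=17, volume_ok_age=16)

shared = stand_a & stand_b
print("first stand operable in years: ", sorted(stand_a))
print("second stand operable in years:", sorted(stand_b))
print("years when both are operable:  ", sorted(shared))
```

An empty intersection means there is no year in which the whole unit can be thinned, so with a threshold below 100% the solver can only ever treat part of it.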

What's The Solution? Don’t Be Sloppy!

More care and attention to operability windows can avoid many problems. Don’t hardwire minimum volume limits when generating yield tables. What do I mean by this? Suppose you are generating yield tables for stands thinned from below to some basal area limit and you only generate a yield table if at least 50 tons/ac is produced. That works fine for harvesting stands, but when you use AAControl, that might cause some of your units to no longer be viable.

Instead, generate the tables accepting whatever volume results from the thinning. You can always add a minimum volume requirement to the *OPERABLE statement in Woodstock to avoid low-volume thinning. But if there is no yield table for the low-volume stands, you can’t create one after the fact. Also, don’t set that minimum volume too high! DevType operability determines which parts of an AAUnit can be harvested, but a higher-volume portion of the unit can still raise the unit's average volume above the level needed for operational feasibility.

For example, in the figure above, the magenta-colored polygons in the unit shown do not meet the desired 20 MBF/ac threshold. However, the blue areas exceed the bar by a significant amount. In fact, the overall average for the unit easily exceeds 20 MBF/ac. So, rather than set the minimum volume to 20 MBF/ac, maybe set it at 15 so the low-volume portions of the block can still be harvested. You can still apply a constraint requiring at least 20 MBF/ac harvested on average.
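The sketch below works through that logic in Python with made-up numbers. The polygon acreages and volumes are hypothetical stand-ins, not values read from the figure. It compares the share of the unit that is operable under a 20 versus a 15 MBF/ac minimum, and computes the area-weighted average for the whole unit.

```python
# Hypothetical member polygons of one unit: (acres, MBF per acre)
polygons = [
    (20.0, 16.0),  # low-volume ("magenta") polygon
    (15.0, 17.0),  # low-volume ("magenta") polygon
    (40.0, 26.0),  # high-volume ("blue") polygon
    (25.0, 28.0),  # high-volume ("blue") polygon
]


def weighted_average_volume(polys):
    """Area-weighted average volume per acre for the whole unit."""
    total_acres = sum(acres for acres, _ in polys)
    return sum(acres * vol for acres, vol in polys) / total_acres


def operable_share(polys, min_volume):
    """Fraction of unit area whose own volume meets the per-polygon minimum."""
    total_acres = sum(acres for acres, _ in polys)
    operable = sum(acres for acres, vol in polys if vol >= min_volume)
    return operable / total_acres


average = weighted_average_volume(polygons)
print(f"unit average: {average:.2f} MBF/ac")
print(f"operable share at 20 MBF/ac minimum: {operable_share(polygons, 20.0):.0%}")
print(f"operable share at 15 MBF/ac minimum: {operable_share(polygons, 15.0):.0%}")
```

With these numbers the unit averages well over 20 MBF/ac, yet a 20 MBF/ac minimum leaves about a third of it inoperable. Dropping the minimum to 15 makes the whole unit operable while the average stays the same.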

Suggestions for Better Operational Feasibility

Be careful with GIS overlays!

- Avoid the creation of near-coincident lines and slivers.

- Use spatial overlays to assign soil attributes to stand polygons.

- Follow stand boundaries as much as possible when delineating harvest units.

- Split stands only when necessary and into significant portions, not slivers.

Review proposed units to make sure they make sense!

- Don’t combine incompatible stands within the same unit (age 5 + age 200 is not a good combo).

- Provide yield tables and timing choices for member DevTypes that overlap in operability.

- Remember that DevType operability determines unit operability, not the weighted average of the unit. Choose appropriate thresholds.

- Don’t accept the Woodstock default of 20% for AAControl! If you can't harvest the majority of a harvest block, then the block is poorly laid out. Fix it!

Having troubles with spatial planning? Contact Me!

If you are concerned about the operational feasibility of your harvest planning models, give me a shout. We can schedule a review and work on some improvements that will provide more confidence in your results.